Caching Bigquery Data: A demo using ReactJS, FastAPI and Redis

Google BigQuery is a serverless data warehouse, offering powerful online analytical processing (OLAP) computations over large data sets with a familiar SQL interface. Since its launch in 2010, it’s been widely adopted by Google Cloud users to handle long-running analytics queries to support strategic decision-making through business intelligence (BI) visualizations.

Sometimes, however, you want to extend the functionality of your BigQuery data beyond business intelligence. One such extension is real-time data visualizations or an API that can be integrated into user-facing applications. And here Bigquery have its limit: It is great at handling large datasets, but will never give you a sub-second response, even on small datasets. It leads to a wait time on dashboards and charts, especially dynamic, where users can select different date ranges or change filters. It is almost always okay for internal BIs, but not for customer-facing analytics. We tolerate a lot of things such as poor UI and performance in internal tools, but not in those we ship to customers.

In this article , we embark on a journey to build a simple web application using FastAPI and ReactJS, designed to present statistics derived from Git user data stored within BigQuery. Delving deeper, we’ll explore the limitations of relying solely on BigQuery for our web application and then propose a remedy to bolster both its performance and cost-effectiveness using caching mechanisms. Let’s start 🚀 !

1. How to handle Bigquery limits ?

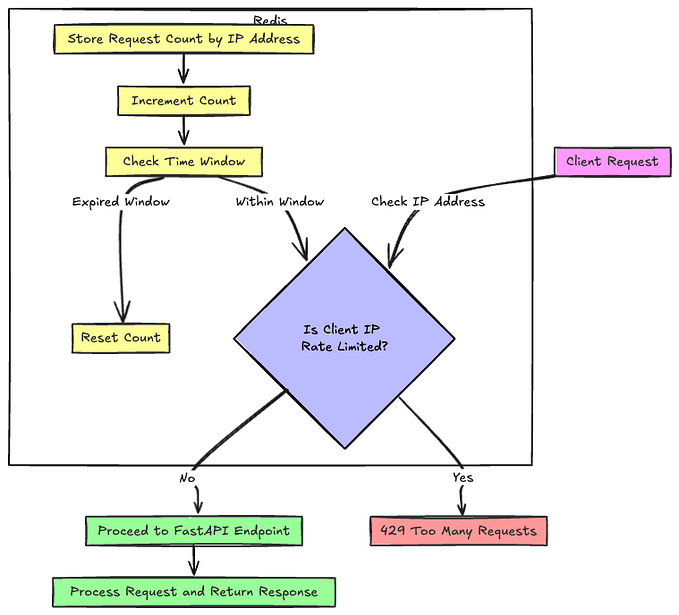

Let’s consider this scenario: If we develop an API or a consumer facing cloud managed IoT backend for a client using BigQuery. One issue that arose was how do end users access their own data? BigQuery is a columnar database, and hence, querying for one user data usually involves scanning all users’ data, which is obviously slower and more expensive than necessary. One more bottleneck, is how we can limit and handle properly high incoming requests to reduce Bigquery costs ?

My opinion: avoid exposing Bigquery directly to your frontend if you are developing and API or a dashboard requiring high latency. We still can leverage BigQuery’s cheap data storage and the power to process large datasets, while not giving up on the performance. As BigQuery acts as a single source of truth and stores all the raw data, we can add MySQL or Redis database that acts as cache layer on top of it and store only small, aggregated tables and provides us with a desired sub-second response. Another option is to use Google Cloud and BigQuery features to improve performance. Things like the BigQuery Storage Write API and BigQuery BI Engine can speed up BigQuery data through streaming ingestion and query caching, respectively. This can work if you don’t have large concurrency requirements and don’t need the fastest responses possible.

In this article, we will explore the first option: we’ll add a cache layer using Redis to enhance the performance and especially reduce Bigquery Cost.

2. Web app overview and prerequisites:

In order to show the advantage of an intermediate layer between Bigquery and a performance requiring application , we will build a simple web app displaying the latest commits of a contributor and a graph of commits relative to contributors for a given repository.

The data is located in a public Bigquery dataset and we will use a table of more than 200 millions records to display the graphs:

Prerequisites for this article are:

- Basic knowledge of any JS framework.

- Basic knowledge of Python and Fast API.

- NodeJS and Fast API installed in your environment.

- Redis installed and running.

3. Frontend setup:

To start, we need to install Node.js, which will be used to run your server-side code and manage packages for your React app. You can download the latest version of Node.js from the official website or use a version manager like nvm for more flexibility.

Once Node.js is installed, we’ll begin by setting up a basic React application. To do it quickly, we will boobstrap it with Create React App.

npm install -g create-react-app

npm create-react-app frontend

cd frontend

In the App.js file, we'll create inputs for the username and repository to search on GitHub. Below is the code to capture user inputs:

<form onSubmit={handleSubmit}>

<div className="row mb-3">

<div className="col-md-6">

<label htmlFor="username" className="form-label">

GitHub Username

</label>

<input

type="text"

className="form-control"

id="username"

name="username"

placeholder="Enter your GitHub username"

value={username}

onChange={(event) => setUsername(event.target.value)}

/>

</div>

<div className="col-md-6">

<label htmlFor="repo" className="form-label">

Repository Name

</label>

<input

type="text"

className="form-control"

id="repo"

name="repo"

placeholder="Enter the repository name"

value={repo}

onChange={(event) => setRepo(event.target.value)}

/>

</div>

</div>

<button type="submit" className="btn btn-primary">

Search

</button>

</form>Here we used Bootstrap. To add Bootstrap designs to your React app, use the following command:

npm install bootstrapNext, we’ll create a components folder in our project directory, and within that folder, we'll create two files: ContributorRepoChart.js and ContributorCommitMessages.js.

Let’s focus on the ContributorCommitMessages.js file for now. This component will display the list of commit messages for each contributor. Below is the code for this component.

import React, { useEffect, useState } from 'react';

const backend = require('../backend');

function ContributorCommitMessages({ username, repo }) {

const [contributorMessages, setContributorMessages] = useState([]);

const [error, setError] = useState(null);

const dateOptions = {

year: 'numeric',

month: 'long',

day: 'numeric',

hour: 'numeric',

minute: 'numeric',

second: 'numeric',

timeZoneName: 'short',

};

const fetchData = async () => {

try {

const data = await backend.callBackend(username, repo);

console.log(JSON.stringify(data));

const filteredData = data.filter(commit => commit.author.name === username);

const contributors = [...new Set(filteredData.map(commit => commit.author.name))];

const messagesByContributor = contributors.map(contributor => {

const contributorCommits = filteredData.filter(commit => commit.author.name === contributor);

const messages = contributorCommits.map(({ message, author }) => ({

message,

date: new Date(author.date).toLocaleString('en-US', dateOptions),

}));

return { contributor, name: contributorCommits[0].author.name, messages };

});

setContributorMessages(messagesByContributor);

} catch (error) {

setError(error.message);

}

};

useEffect(() => {

fetchData();

}, [username, repo]);

if (error) {

return <div className="container mt-3">Error: {error}</div>;

}

return (

<div className="container mt-3">

{contributorMessages.map(contributor => (

<div key={contributor.contributor} className="card mb-3">

<div className="card-header">

<h2 className="h6 card-title">Commit messages by {contributor.name} ({contributor.contributor}):</h2>

</div>

<div className="card-body">

<table className="table">

<thead>

<tr>

<th>Message</th>

<th>Date</th>

</tr>

</thead>

<tbody>

{contributor.messages.map(message => (

<tr key={`${contributor.contributor}-${message.message}`}>

<td>{message.message}</td>

<td>{message.date}</td>

</tr>

))}

</tbody>

</table>

</div>

</div>

))}

</div>

);

}

export default ContributorCommitMessages;In the above code, we call the backend to fetch the Github data located in Bigquery and then we apply lightweight transformation to display the list of commit messages for the contributor. The function to call the backend is simple, we used “fetch” to perform the API call to the backend but feel free to replace it with axios.

3. Backend setup:

For the backend, it‘s also simple. We will create a FastAPI application that get a Github username and a repository name from the frontend, performs a query to Bigquery and then send back the results to the front in order to populate the graphs. Below the backend folder structure:

⚠️ I’m using poetry to handle python dependencies but feel free to replace it by pipenv or any tool.

We start by a simple “main.py” file to initiate our FastAPI app with a single router. If you’re not familiar with Fast API, visit the official documentation for a quick start.

import uvicorn

from fastapi import FastAPI

from fastapi.middleware.cors import CORSMiddleware

#from cache.redis import init_cache

from config import Config

from routes import v1_router

app = FastAPI(

version=Config.VERSION,

title=Config.APP_TITLE,

description=Config.APP_DESCRIPTION,

openapi_url=Config.OPENAPI_URL,

)

origins = [Config.FRONTEND_URL, "http://localhost:3000", "localhost:3000"]

app.add_middleware(

CORSMiddleware,

allow_origins=origins,

allow_credentials=True,

allow_methods=["*"],

allow_headers=["*"],

)

#Startup event

@app.on_event("startup")

async def startup_event():

print("Processing startup initialization")

#init_cache()

@app.get("/")

async def root():

return {"message": "Backend working"}

app.include_router(v1_router)

if __name__ == "__main__":

uvicorn.run(app="main:app", host="0.0.0.0", port=8000, reload=True)I put in comment everything related to cache for instance.

Our single router code is as follows:

import json

from typing import Optional, List, Dict

from fastapi import APIRouter

from google.cloud import bigquery

from pydantic import BaseModel

v1_router = APIRouter(

prefix="/v1",

)

class User(BaseModel):

name: str

repo: Optional[str] = None

@v1_router.post("/")

async def get_git_stats(user: User) -> List[Dict]:

print(f"username : {user.name}, {user.repo}")

rows = _fetch_stats_bq(user)

return json.loads(rows)

def _fetch_stats_bq(user: User) -> List[Dict]:

"""

Fetch User stats from BQ table.

"""

QUERY = f"""

SELECT * FROM `bigquery-public-data.github_repos.commits`

WHERE author.name = '{user.name}'

"""

if user.repo:

QUERY += f" AND repo_name = '{user.repo}'"

QUERY += " LIMIT 400"

query_job = client.query(QUERY)

rows = query_job.result()

if rows.total_rows > 0:

df = rows.to_dataframe()

return df.to_json(orient="records", force_ascii=False)

return []

@v1_router.get("/")

async def default_response():

return {"status": "success"}Now if I open “Developer tools” in my navigator and perform a query on our React app, deployed locally, I see that a single fetch from the backend took around 4 seconds. Imagine if we have 100 of users using simultanesouly the application and many of them are looking for the same Github user stats, or trying to check the result for many users, one by one, we will have a high wasted Bigquery query cost, coupled with high latency.

So, let’s fix this !

4. Caching layer using Redis:

As we said in the introduction, Google BigQuery is a great tool to combine and analyze large amounts of data. However, it’s not suited to provide data directly to (high volume) production environments.

The solution proposed in this article is trying to solve that problem by using Redis as a low-latency caching mechanism and can be used in high-volume environments.

Caching refers to the practice of storing a copy of the data fetched from a database in a cache. This approach reduces the need to repeatedly fetch the same data from the database, thereby decreasing response time and load on the database.

Redis is a robust in-memory database known for its ability to handle data at lightning speeds. It supports various data structures such as strings, hashes, lists, sets, and sorted sets, which can be used to store and retrieve data efficiently. Redis is often used as a cache to reduce the primary database load and speed up data access.

Integrating Redis into our application can significantly enhance its performance. For instance, we could use it to store user details, in a key-value format. This would allow for quick access to user information without querying Bigquery every time.

Setting Up a Redis Server

Next, you’ll need to install Redis. The installation process varies depending on your operating system. Redis can be installed using the default package manager for most Linux distributions. For Ubuntu:

sudo apt update

sudo apt install redis-server

For macOS, it can be installed using Homebrew:

brew install redisAfter installing Redis, you can start the Redis server with the following command:

redis-server

This will start the Redis server with the default configuration. You should see a message indicating that the Redis server is ready to accept connections.

To test that your Redis server is running correctly, you can use the Redis CLI (Command Line Interface) tool:

redis-cli ping

If the Redis server is running, you should receive a PONG response.

Now , we are ready to integrate redis in our application, and specfically to our FastAPI based backend!

First, let’s add a redis client using poetry:

⚠️ You can also use aioredis instead of redis for better asynchronous task handling.

Next, we will update our router to save and retrieve the results from Redis: when we fetch a user, the application first checks if the user data is available in the cache. If it’s not, it fetches from the database and then caches it.

@v1_router.post("/")

async def get_git_stats(user: User) -> List[Dict]:

print(f"username : {user.name}, {user.repo}")

redis_key = user.name + user.repo if user.repo else user.name

redis_key = redis_key.replace(" ", "_")

data = get_val_from_cache(redis_key)

if data:

print("fetching data from cache")

return json.loads(data)

rows = _fetch_stats_bq(user)

set_val_to_cache(redis_key, rows)

return json.loads(rows)And below the functions to retrieve and set the values from the cache:

def redis_connect() -> redis.client.Redis:

try:

redis_client = redis.StrictRedis(host=Config.REDIS_HOST, port=Config.REDIS_PORT)

ping = redis_client.ping()

if ping is True:

return redis_client

except redis.ConnectionError:

print("Connection Error!")

sys.exit(1)

redis_client = redis_connect()

def get_val_from_cache(key: str) -> str:

"""Data from redis."""

val = redis_client.get(key)

return val

def set_val_to_cache(key: str, value: str) -> bool:

"""Data to redis."""

state = redis_client.setex(

key,

timedelta(hours=24),

value=value,

)

return stateAs you saw, it’s not complicated to integrate Redis between an application and a database.

Now it’s time to check the performance. I run the same query as before and I got 4 seconds of latency (no change, obvious !), but it was cached. Now I run again the query and the latency is … is ….. 50 ms ! From 4 seconds to 50 ms, not bad at all !

So if we have 100 of users using simultaneously the dashboard, the overall performance will certainly be much more better as many of the queries are cached and hence accessible instantly using redis.

Advanced caching strategies:

In this article, we talked only about caching query, it’s certainly a powerful tool but requires careful consideration regarding initialisation, invalidation, consistency, and cache lifetime:

- In our web application, we can add the function “init_cache” (already implemented) in the startup process of FastAPI to copy recent data from Bigquery to Redis.

- It’s also important to refresh the cache in another background process running periodically.

- We can use managed cache database like Memorystore to have near zero downtime, micro seconds latenciens and high throughput for a cheap price.

Conclusion:

If you need to reduce latency with BigQuery, whether to build real-time dashboards or any other user-facing analytics feature, caching using Redis is an effective strategy to boost your application’s performance. It reduces database load and decreases response times, especially for read-heavy applications.

I hope this article was helpful to you, don’t hesitate to share it in Linkedin and please let me know if you have any feedback or questions !

To keep in touch with my latest posts, you can give me a follow on Medium 😃